Frame-by-Frame Animation in the Age of AI Text-to-Video

What is frame by frame animation?

Frame-by-frame animation refers to a process where images are created and sequenced together to produce the illusion of movement. Hand-drawn illustrations defined the early Disney films and gradually gave way to CGI in the mid-to-late 1990s. Animation studios still build their storyboards around keyframes, but computers now assist by rendering all of the frames in between.

The scene above comes from the 1973 French frame-by-frame animation La Planète sauvage. Some will recognize it under the English title, Fantastic Planet.

Fantastic Planet was renowned for its use of an unusual cutout animation technique. Pre-drawn and colored paper cutouts were moved around and photographed, frame-by-frame, to create the unique and almost collage-like visual effect.

I point out this famous scene from Fantastic Planet because its cross-fading technique bears some resemblance to current trends in AI video.

The film's surreal and existential themes doubled as commentary on the social and political attitudes of the bourgeoisie. It explored a psychedelic aesthetic with bizarre, dream-like sequences and vivid colors.

How has AI video impacted animation?

AI video generation is still relatively new for the public. Independent artists started using apps like neural frames in 2023 to create original music videos. But there's an open question about whether art from Stable Diffusion can be used in bigger-budget, cinematic releases.

Marvel tried their luck in 2023 with the opening credits of Secret Invasion. The text overlays faded in above the recognizable look and feel of morphing Stable Diffusion images. Fans pushed back, saying that the studio should pay their artists more and stop trying to replace them with AI.

Despite this resistance from fans, the broader public sentiment towards AI video has been mixed. There's a lot of positive feedback on Twitter for indie artists like Ben Nash, who use generative software to create original AI music videos.

Several popular AI video generation apps are available today, including neural frames, Runway ML, and Pika Labs. Each web app specializes in a different kind of animation technique. The explainer video below provides a step-by-step breakdown, showing how to generate animations in between keyframes using artificial intelligence.

Neural frames takes two keyframe images and animates the “tweens”, or individual frames in between them.
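To make the idea of tweens concrete, here is a minimal sketch of the simplest possible in-betweening: a linear cross-fade between two keyframes. This is not how neural frames generates its in-between frames; it is a plain pixel blend used as a stand-in. The function name `cross_fade_tweens` and the tiny grayscale frames are assumptions made up for illustration.

```python
def cross_fade_tweens(frame_a, frame_b, n_tweens):
    """Return n_tweens in-between frames that linearly blend frame_a into frame_b.

    Frames are equal-sized 2D lists of grayscale values (0-255).
    The two keyframes themselves are not included in the result.
    """
    if n_tweens < 0:
        raise ValueError("n_tweens must be zero or positive")
    if len(frame_a) != len(frame_b) or any(
        len(row_a) != len(row_b) for row_a, row_b in zip(frame_a, frame_b)
    ):
        raise ValueError("keyframes must have the same dimensions")

    tweens = []
    for i in range(1, n_tweens + 1):
        # Blend weight runs strictly between 0 (all frame_a) and 1 (all frame_b).
        t = i / (n_tweens + 1)
        tweens.append([
            [round((1 - t) * a + t * b) for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(frame_a, frame_b)
        ])
    return tweens


# Hypothetical 2x2 keyframes: a black frame fading into a brighter one.
key_a = [[0, 0], [0, 0]]
key_b = [[200, 100], [40, 0]]
for tween in cross_fade_tweens(key_a, key_b, 3):
    print(tween)
```

Each tween is a weighted average of the two keyframes, so the first one stays close to the starting image and the last one is close to the ending image.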

Instrument stems, like a song’s snare or kick drum, can be isolated and set up to trigger changes in the speed, zoom, and rotation between frames of an animated sequence.
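As a rough illustration of audio-reactive animation, the sketch below turns an isolated stem into a per-frame zoom value. It assumes the stem is already available as a list of samples between -1 and 1. The function names, the threshold, and the strength value are all hypothetical and do not describe any particular app's settings.

```python
def frame_envelope(samples, samples_per_frame):
    """Peak absolute amplitude of each frame-length chunk of the stem."""
    if samples_per_frame <= 0:
        raise ValueError("samples_per_frame must be positive")
    return [
        max(abs(s) for s in samples[i:i + samples_per_frame])
        for i in range(0, len(samples), samples_per_frame)
    ]


def envelope_to_zoom(envelope, base_zoom=1.0, strength=0.5, threshold=0.2):
    """Map each frame's loudness to a zoom factor.

    Frames quieter than the threshold keep the base zoom, so only clear
    hits (for example a kick drum) trigger a visible change.
    """
    zooms = []
    for level in envelope:
        boost = strength * level if level >= threshold else 0.0
        zooms.append(base_zoom + boost)
    return zooms


# Hypothetical kick-drum stem: six samples, two samples per video frame.
stem = [0.0, 0.1, -0.9, 0.2, 0.05, -0.05]
envelope = frame_envelope(stem, samples_per_frame=2)
print(envelope_to_zoom(envelope))
```

The same mapping could drive speed or rotation instead of zoom by swapping the base value and strength.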

You don't have to go digital. It's easy to start with a paper flipbook, or to photograph your drawings and turn them into GIFs. You just need some basic drawing tools and the patience to continue from that first frame to the next.

If you don't have the time to draw and animate a full sequence by yourself...

Try neural frames for free and see how easy it is to make your own animated videos. Sign up today and join the free Discord community to see what other people are creating! We also have an AI image description generator, to read the context from your images.

Frame-by-Frame animation examples

Émile Cohl’s 1908 Fantasmagorie was one of the first-ever animated short films. Cohl's hand-drawn stick figure moved through a world where everything was constantly shifting and changing.

Animators could bring magical stories to life. The imagination was set free from the constraints of ordinary life. A wine bottle could unfold into a flower whose stem could become the trunk of an elephant. For decades, a generation of artists grew up aspiring to draw and create animations of their own.

In 1937, Disney released Snow White and the Seven Dwarfs as the first-ever full-length, full-color animated feature film. With a frame rate of 24 images per second and a run time of 1 hour and 23 minutes, nearly 120,000 individual frames were drawn by hand to complete the long-form animation project.
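The frame count follows directly from the frame rate and the run time. Here is a quick check of the arithmetic, assuming one unique drawing for every frame (the helper name `total_frames` is made up for this example):

```python
def total_frames(fps, hours, minutes):
    """Number of frames needed to fill a run time at a constant frame rate."""
    return fps * (hours * 60 + minutes) * 60


snow_white_frames = total_frames(fps=24, hours=1, minutes=23)
print(snow_white_frames)  # 119520
```

That is 24 frames for each of the film's 4,980 seconds, which is where the figure of nearly 120,000 comes from.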

In this article we’ll have a look at current trends in frame-by-frame animation, including 2.5D and AI-generated videos, to see how technology has evolved to serve animators of all skill levels.

The early origins of animation

The history of animated frames can be traced back nearly 5,000 years, to the Bronze Age! Above is an unfolded panorama of a famous clay bowl found in Iran. The curved surface features a sequence of line drawings where a goat jumps towards a tree to eat its leaves. The bowl can be placed on a rotating platform, like a potter's wheel, to bring the short animation to life.

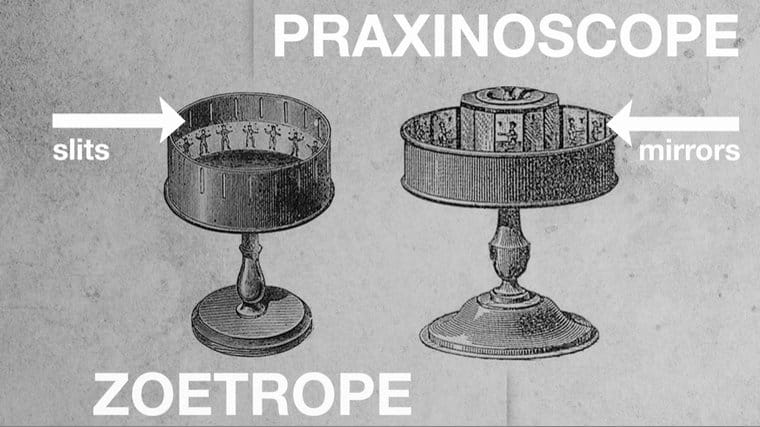

In more recent history, around 1833, a rotating animation machine called the phenakistiscope first made headlines in France. By 1866, the toy company Milton Bradley released a similar device called the Zoetrope, intended for home use.

During the 1890s, a device called the praxinoscope could project short animations onto a surface. It was eventually replaced by the photographic film techniques of the Lumière brothers.

Around this same period, filmmakers were experimenting with stop motion animation. A film's special effects team would use a “stop trick” in the middle of a moving picture to manipulate the imagery that followed. In this way, they could create the impression of continuous, live-action footage for the audience.

Do animators still draw frames by hand?

Yes, the hand-drawn, frame-by-frame animation style is still popular and is still finding new ways to appeal to its target audience. In recent years, illustrated animations have sought to imitate digital mediums, while bringing their rich organic textures into play. Below are some examples that received critical acclaim in the last few years.

The 2019 Netflix movie Klaus was a hand-drawn 2D animation celebrated for its visual depth and shading. Many people assumed the film had been created with CGI and treated with digital textures to look organic. The ability to blur the lines between illustrations and CGI is a testament to the animation studio's skill.

Cartoon Saloon's 2020 film Wolfwalkers also made headlines for its rich hand-drawn landscapes. The team started with 3D-rendered environments. Then nine animators were assigned to recreate every digital frame by hand, across thousands of sheets of paper.

Today, some of the biggest names in animation, like Studio Ghibli and Rebecca Sugar’s Steven Universe, sequence their hand-drawn animations in software like Toonz, OpenToonz, and Animaker. Their animation processes might also include ordinary programs like Adobe Photoshop and After Effects.

Ultimately, while some traditional animation studios still storyboard with ink on paper, the illustrated images are migrated into digital animation projects later in the production pipeline. Of course, today you can also use AI cartoon generators to achieve this effect.

Frame by frame animation evolved into CGI

Imagine the difficulty of joining an animation team back in the early days. You've been hired to manually illustrate each swinging limb in Pinocchio's walk cycle. The film's release date has already been announced and you're behind schedule.

CGI teams made it easier to crank out character animations, using 3D components that move and swivel between keyframes on a precise axis. Instead of drawing each frame manually, the speed and trajectory of motion are programmed. The software takes care of rendering all of the movement in between. What a relief!
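The sketch below shows, in simplified form, how software can fill in the motion between two keyframes. It assumes a single joint rotating around one axis and uses a common smoothstep easing curve. The `Keyframe` class and the swing angles are illustrative and are not taken from any specific animation package.

```python
from dataclasses import dataclass


@dataclass
class Keyframe:
    frame: int    # position on the timeline, in frames
    angle: float  # joint rotation around its axis, in degrees


def ease_in_out(t):
    """Smoothstep easing: slow start, faster middle, slow stop."""
    return t * t * (3 - 2 * t)


def interpolate_angle(start, end, frame, easing=ease_in_out):
    """Rotation at a given frame between two keyframes."""
    if start.frame >= end.frame:
        raise ValueError("end keyframe must come after start keyframe")
    if not start.frame <= frame <= end.frame:
        raise ValueError("frame lies outside the keyframe range")
    # Normalized progress through the move, from 0.0 to 1.0.
    t = (frame - start.frame) / (end.frame - start.frame)
    return start.angle + (end.angle - start.angle) * easing(t)


# Hypothetical leg swing: from -30 degrees to +30 degrees over 12 frames.
back = Keyframe(frame=0, angle=-30.0)
front = Keyframe(frame=12, angle=30.0)
for f in range(back.frame, front.frame + 1, 3):
    print(f, interpolate_angle(back, front, f))
```

The animator only sets the two poses and the timing. Every angle in between is computed, and changing the easing function changes the feel of the motion without redrawing anything.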

The transition from cel frames to CGI was first apparent in Disney’s 1991 film Beauty and the Beast when they imitated a camera dolly in the castle ballroom. Most people don't know this, but the 3D ballroom was actually rendered by Pixar's engine, four years before their first feature film (Toy Story) came out in 1995.

Frame-by-frame animation has since migrated into mostly digital workflows, with artists drawing on electronic tablets and editing on monitors. Time-consuming manual techniques were automated, and software added digital coloring, onion skinning, and audio-video timelines.

CGI can vary from 2D vector drawings to 3D models. Software like Adobe Animate, Toon Boom Harmony, and others have become industry standards today.

The introduction of artificial intelligence to this technology will open up an almost endless world of possibilities. We're excited to see what's coming next!
