Seedance 1.0: ByteDance’s fast AI video model - and why the music‑video world should pay attention

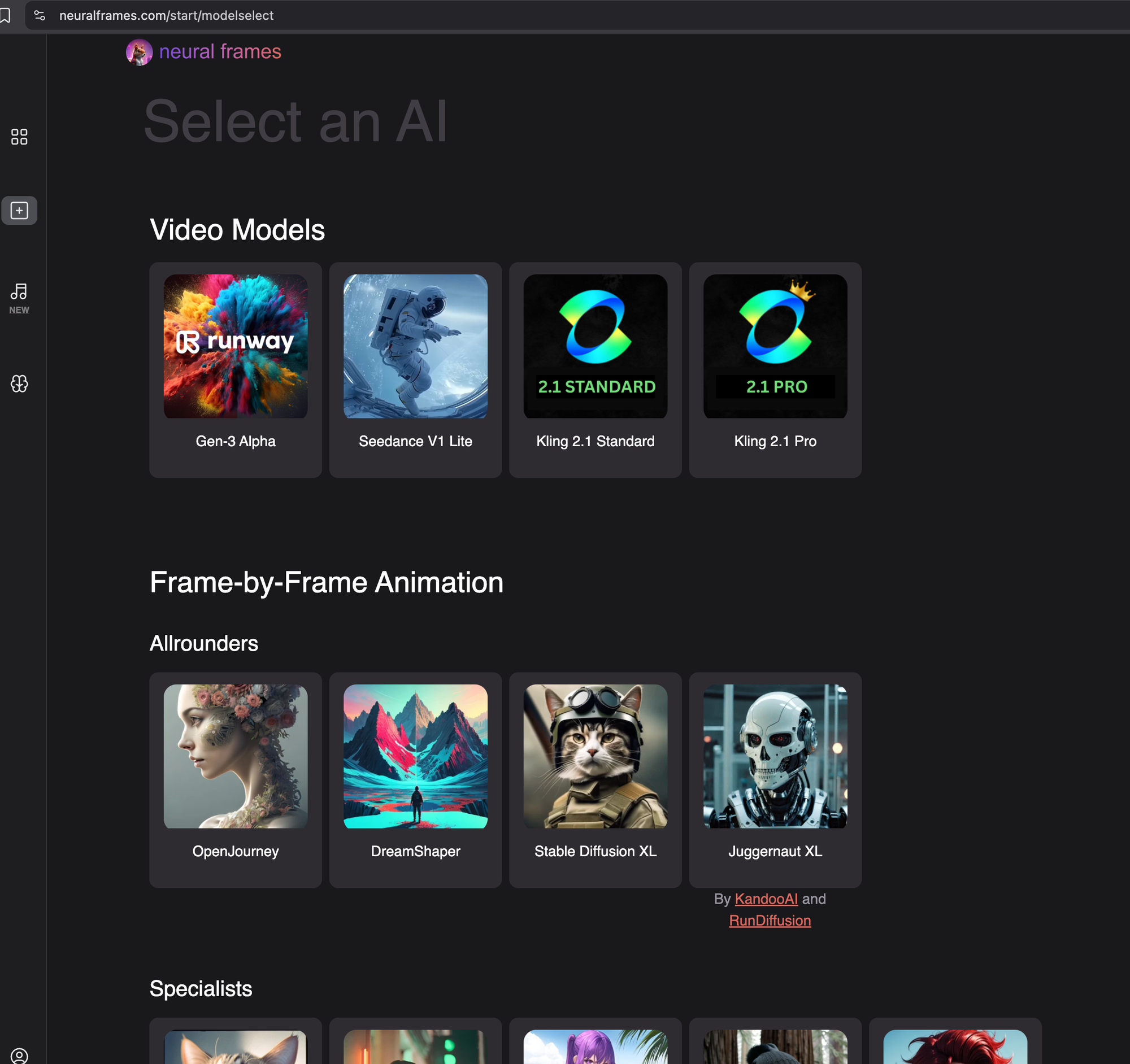

Seedance 1.0 is ByteDance’s new flagship text‑ and image‑to‑video model. It was unveiled in June 2025 alongside the company’s Doubao 1.6 language suite and is already live inside ByteDance’s Volcano Engine cloud as well as on Neural Frames. The public tech sheet highlights four things:

- 1080p output at 24 fps with rich textures and color depth.

- Time‑causal VAE + decoupled spatio‑temporal Transformer architecture.

- Video‑specific RLHF for prompt accuracy, motion realism and aesthetics.

- Aggressive multi‑stage distillation that makes inference “GPU‑cheap.”

Put simply: it follows your prompt, keeps the physics believable, and looks gorgeous while doing it - without the usual 5‑minute wait.

Why is it so fast (and cheap)?

- Model compression. ByteDance distilled a monster teacher model down to a nimbler student, cutting inference time roughly 10×.

- Two‑stage pipeline. A 480p “draft” is created first; a refiner then upsamples to Full HD. This keeps heavy attention layers off the largest tensors.

- GPU‑friendly scheduling. The diffusion schedule was redesigned so that later timesteps can be merged or skipped when latency matters.

- Cloud economics. Volcano Engine prices Seedance at ≈ ¥3.67 (about fifty US cents) per 5‑second HD render, an aggressive play that undercuts Western rivals by ~70%.

For Neural Frames users who routinely batch‑render 3‑minute songs, that means a bill that used to be $30 drops into the low single digits.
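To get a feel for why drafting at 480p before refining to Full HD saves compute, here is a rough back-of-the-envelope sketch. It assumes 16:9 frames, a token count proportional to pixel count, and self-attention cost that grows with the square of the token count. None of these figures come from the Seedance tech sheet; they are illustrative assumptions only.

```python
# Rough comparison of a 480p draft against a 1080p frame.
# Assumptions (not from the Seedance tech sheet): 16:9 frames, tokens
# proportional to pixel count, self-attention cost ~ tokens squared.

def pixels(width: int, height: int) -> int:
    """Number of pixels in a single frame."""
    return width * height

draft = pixels(854, 480)      # 480p at 16:9
full_hd = pixels(1920, 1080)  # 1080p at 16:9

token_ratio = full_hd / draft        # how many more tokens Full HD needs
attention_ratio = token_ratio ** 2   # quadratic attention cost, by assumption

print(f"1080p has {token_ratio:.1f}x the pixels of 480p")
print(f"Quadratic attention would cost about {attention_ratio:.0f}x more")
```

Under these assumptions, running the heavy attention layers only on the draft avoids most of that gap, which is the intuition behind the two-stage design described above.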

An AI music video made with Seedream

How does it look in the editor?

- Multi‑shot mode – Seedance can output several cohesive shots in one pass; think “wide establishing → mid‑shot → slow‑motion close‑up” without having to splice separate generations.

- Motion range – From subtle facial twitches to acrobatic camera moves; the time‑causal layers keep everything fluid.

- Style breadth – Photorealism, cel‑shaded anime, felt‑texture stop‑motion—Seedance handles them all by virtue of dense caption training.

Seedance vs. other heavyweights

| | Seedance 1.0 | OpenAI Sora Turbo | Runway Gen‑4 Turbo |
|---|---|---|---|
| Max resolution | 1080p | 1080p | 720p (upscales to 4K) |
| Typical clip length | 5 s (multi‑shot) | 20 s | 5 or 10 s |
| Single‑clip latency | ~41 s | “Up to a minute” on Plus; longer under load | 25–50 credits ≈ 1‑2 min at 720p |
| Cost (HD 5‑s) | ≈ $0.50 | Part of $20/$200 ChatGPT tiers; no per‑clip price | 25–60 credits (~$0.75–1.50) |
| Prompt fidelity | High (RLHF reward on motion + semantics) | Good, but physics still shaky | Medium; needs image anchor for consistency |
| Multi‑shot support | Native | Storyboard tool (manual) | Requires separate renders |

A great overview on Seedance 1.0

Why music‑video makers should care

- Iterate like a live VJ. A roughly 40‑second turnaround makes “prompt‑jam‑prompt” creative loops feasible during a studio session.

- Multi‑shot narrative unlocks mini‑stories, not just looping backdrops. Think full TikTok hooks or Spotify canvases with cold‑open → chorus drop cuts in one render.

- Budget‑proof experimentation. At fifty cents a pop, you can afford to A/B 30 variations of that synth‑wave neon skyline and keep the best one.

- Time to market. Faster renders mean content lands while a track is still trending—crucial for the algorithmic zeitgeist.
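As a quick sanity check on the experimentation budget, the sketch below multiplies out the figures quoted in this article: about fifty cents per 5‑second HD render and roughly 41 seconds of latency per clip. The function name `ab_test_budget` is made up for illustration, and the time estimate assumes clips render one after another rather than in parallel.

```python
COST_PER_CLIP_USD = 0.50  # article figure: about fifty cents per 5-second HD render
LATENCY_S = 41            # article figure: ~41 s single-clip latency

def ab_test_budget(variations: int) -> tuple[float, float]:
    """Return (total cost in USD, sequential render time in minutes)."""
    cost = variations * COST_PER_CLIP_USD
    minutes = variations * LATENCY_S / 60
    return cost, minutes

cost, minutes = ab_test_budget(30)
print(f"30 variations: ${cost:.2f}, about {minutes:.1f} min rendered back to back")
```

At these rates, 30 variations come to $15 and around 20 minutes of sequential rendering; actual pricing and latency on a given platform may differ.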

Seedance 1.0 feels like the moment AI video leapt from “neat demo” to “production-ready workhorse.” And because the ultra-fast Seedance Lite engine is already live inside Neural Frames, you don’t have to wait for the future - you can render crisp multi-shot clips in seconds right now. Give it a spin, share your best results with us, and you might just see your video featured in our community gallery.